Conventional Data Center Networks

A conventional data center network comprises the data servers and associated components such as telecommunications and storage systems.

- The network consists of one or more data networks, storage networks, and management networks.

- The physical network design is server-centric.

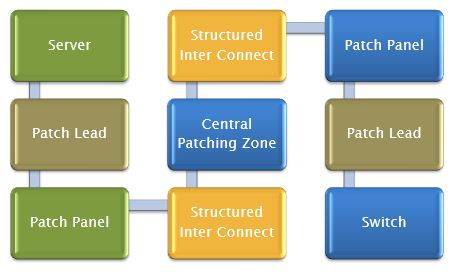

- A typical server may have 5 network ports to connect, each cabled through a patch lead, rack patch panel, structured interconnect, central patch panel, and switch.

Pitfalls in conventional Data Center networks

- Expensive, inflexible, complex, and prone to error.

- Moving a server from one network to another requires human intervention.

- Troubleshooting is manual and tedious. Most of the time, issues turn out to be cabling errors or patch panel failures.

- Unable to scale with the evolution to 10G and 40G Ethernet.

Types Of Data Center Networking

New generation Data Center requirements

- With the migration from 1G to 10G, new cabling and network switching architectures should help ensure a cost-effective and smooth data center transition.

- The data center should be able to respond to growth and changes in equipment, standards, and demands while remaining manageable and reliable.

- Modularity and flexibility: Network organizers look for server vendors who provide preconfigured racks of equipment with integrated cabling and switching infrastructure.

- I/O connectivity options: Racks equipped with 10G Ethernet or a unified network fabric with Fibre Channel over Ethernet (FCoE).

- Virtualized compute platform: Virtualization is one of the main areas of focus for IT decision makers.

- High availability for the management and control planes.

- Effective segmentation (management, data, and control planes) for security and regulatory compliance.

Types of Data Center Architectures

End Of Row Switching

- The traditional data center network approach; only the equipment changes.

- Most servers in the different racks connect to larger chassis-based switches that support a higher number of 1G ports.

- Cost-effective in terms of port utilization.

- The major pitfall is that all the servers are connected directly to the chassis line cards.

- A redundant chassis cannot easily be integrated into this design.

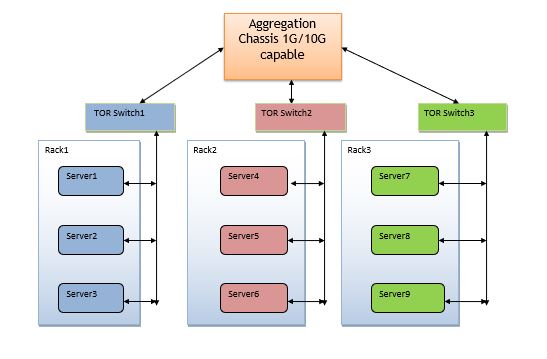

Top of Rack Switching

- One or more switches are placed in each rack. All the servers in the rack connect to this top-of-rack (ToR) switch.

- A single uplink runs from the top-of-rack switch to the aggregation layer.

- Redundancy is easily achieved by adding a second ToR switch to each rack and one additional link towards the aggregation layer.

- It provides architectural advantages such as port-to-port switching for servers within a single rack, predictable oversubscription, and smaller switching domains.

- Failures are easy to isolate, and containing them takes less time.

- The major bottleneck with this model is that everything needs to be preconfigured.

- Requires services such as security applications deep in the network to prevent data theft or intrusion.
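The "predictable oversubscription" of a ToR switch can be made concrete with a little arithmetic. The following is a minimal sketch, not a vendor formula: the function name and the rack sizes are hypothetical, and the ratio is simply total server-facing capacity divided by total uplink capacity.

```python
def oversubscription_ratio(server_ports: int, server_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Return server-facing capacity divided by uplink capacity for one ToR switch."""
    if uplinks <= 0 or uplink_gbps <= 0:
        raise ValueError("at least one uplink with positive speed is required")
    return (server_ports * server_gbps) / (uplinks * uplink_gbps)


# Hypothetical rack: 40 servers at 1 Gbps behind two 10 Gbps uplinks.
ratio = oversubscription_ratio(40, 1.0, 2, 10.0)
print(f"{ratio:.1f}:1")  # 2.0:1
```

Because every rack has the same port count and uplink count, the ratio is the same for every rack, which is what makes it predictable.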

Integrated Switching

- Intended specifically for blade server environments.

- Integrates Ethernet and FCoE I/O on a single platform.

- Simplifies infrastructure and increases manageability.

- Can support only a limited number of servers.

Recommendations

- Any one of these architectures can be selected, depending on the data center's capacity (number of servers and applications).

- Most switch vendors recommend the Top of Rack architecture, which is scalable, simple to manage, and offers greater cost savings.

- The Enterprise network group, in its 2010 release, noted ToR as the best option for new-generation data center requirements.

Typical Top Of Rack Design

Technologies For Data Center

List of Technologies for Data Center

- 10GBASE-T

- Next Gen Ethernet Network Interface Card (NIC)

- Low Latency Ethernet switching

- Data Center Bridging eXchange (DCBX)

- 40 Gbps and 100 Gbps Ethernet

- Energy Efficient Ethernet

- Class Based Pause Frames

- Congestion Notification

- Enhanced Transmission Selection

- Shortest Path Bridging

- Fibre Channel over Ethernet

10GBASE-T

- 10 Gbps connections over unshielded or shielded twisted-pair copper cables.

- Allows a gradual transition from 1000BASE-T to 10GBASE-T without major cabling changes.

- Lower cost than fiber-based 10G solutions.

Low Latency Ethernet switching

- Cut-through Layer 2 switching is required to reduce port-to-port latency and overall end-to-end network latency.

- Cut-through also reduces the accumulated serialization time in multi-hop networks, because a switch starts forwarding before it has received the whole frame.

- Layer 2 data center connectivity is very reliable when servers and switches both support 10G.

- Its disadvantage is that corrupted frames are forwarded into the network, because forwarding begins before the frame check sequence can be verified.
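The serialization saving of cut-through switching can be estimated with a short calculation. This is a rough sketch that ignores propagation delay and switch processing time; the function names are hypothetical, and the assumption that a cut-through switch waits for 14 header bytes before forwarding is illustrative only.

```python
def serialization_us(num_bytes: int, link_gbps: float) -> float:
    """Time in microseconds to clock num_bytes onto a link of link_gbps."""
    return num_bytes * 8 / (link_gbps * 1000)


def store_and_forward_us(frame_bytes: int, link_gbps: float, switches: int) -> float:
    """Every link on the path (switches + 1 of them) serializes the full frame in turn."""
    return (switches + 1) * serialization_us(frame_bytes, link_gbps)


def cut_through_us(frame_bytes: int, link_gbps: float, switches: int,
                   header_bytes: int = 14) -> float:
    """The frame is serialized once; each switch adds only the header wait.

    header_bytes=14 is an assumed value for illustration.
    """
    return (serialization_us(frame_bytes, link_gbps)
            + switches * serialization_us(header_bytes, link_gbps))


# Hypothetical path: a 1500-byte frame crossing 3 switches on 10 Gbps links.
print(round(store_and_forward_us(1500, 10, 3), 4))  # 4.8
print(round(cut_through_us(1500, 10, 3), 4))        # 1.2336
```

The gap widens with every extra hop, which is why the benefit is most visible in multi-hop networks.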

Next Gen Ethernet Network Interface Card (NIC)

- Provides hardware acceleration for TCP/IP and allows I/O virtualization.

- Falls back to host-based processing when interacting with legacy applications.

- CPU utilization needs to stay below 10% during 10G file transfers.

- Reduced end-to-end latency of 5-10 microseconds.

Data Center Bridging eXchange (DCBX)

- Uses LLDP to exchange Data Center Bridging capabilities such as end-point congestion notification, Enhanced Transmission Selection, and priority flow control.

- This information helps other network elements understand the capabilities of their peers so that Data Center Bridging can be achieved.

40 Gbps and 100 Gbps Ethernet

- Demand for Ethernet bandwidth above 10G is now common in the data centers of content and service providers.

- Growing demand for online video content (YouTube, Yahoo Videos) is driving the need for 40G and 100G.

- YouTube currently delivers 2.9 billion online videos per year in the USA alone.

- IEEE 802.3ba, which covers 40G and 100G, has already been approved.

- Almost all equipment vendors' new-generation product lines are 40G capable.

Energy Efficient Ethernet

- Ethernet cards draw the same amount of power regardless of traffic load or network utilization.

- According to a Gartner Group study, the annual cost of data center power and cooling can exceed the CAPEX of the servers.

- IEEE P802.3az is working on a specification to reduce the power drawn by links while the ports are idle or operating under a lighter load.

- It introduces a new Low Power Idle state, which takes both port utilization and power consumption into account.

Class Based Pause Frames

- Traditional pause frames tell the next-hop network element to reduce its traffic rate. These pause frames apply to the whole port.

- IEEE is currently drafting a new standard in which the class of service (CoS) is carried along with the pause frame.

- Once the CoS is known to the next-hop network element, it reduces the load only for the corresponding CoS and does not disturb the rest of the services.

- In this way, uncongested classes can continue transmission across the link.
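The difference between a port-wide pause and a class-based pause can be illustrated with a toy model. This is a sketch only: the `Transmitter` class and its method names are hypothetical, and the use of eight classes assumes the eight 802.1p priority values.

```python
NUM_PRIORITIES = 8  # assumption: the eight 802.1p priority values


class Transmitter:
    """Toy model of a sender that honours pause requests from its link peer."""

    def __init__(self) -> None:
        self.paused = [False] * NUM_PRIORITIES

    def on_port_pause(self) -> None:
        """Traditional pause: the whole port stops, regardless of class."""
        self.paused = [True] * NUM_PRIORITIES

    def on_class_pause(self, cos: int) -> None:
        """Class-based pause: only the named class of service stops."""
        self.paused[cos] = True

    def sendable_classes(self) -> list[int]:
        """Classes of service that may still transmit."""
        return [cos for cos, stopped in enumerate(self.paused) if not stopped]


tx = Transmitter()
tx.on_class_pause(3)          # peer reports congestion for class 3 only
print(tx.sendable_classes())  # [0, 1, 2, 4, 5, 6, 7]
```

With a traditional pause, `on_port_pause()` would leave no class able to send, which is the behavior the class-based scheme avoids.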

Congestion Notification

- Existing congestion detection mechanisms are local to an individual switch or router and do not take the state of the overall network into account.

- This can cause unwanted impact on other parts of the network, for example where a common router distributes traffic across two tiers.

- Quantized Congestion Notification (QCN), introduced by 802.1Qau, aims to resolve this problem.

- QCN helps network elements detect congestion in a well-defined manner and generate pause frames or rate-limit traffic accordingly.

- QCN together with class-based pause frames results in end-to-end congestion management.
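The general idea of congestion feedback driving a rate limiter can be sketched as follows. This is a simplified illustration loosely modelled on how QCN is commonly described (a switch derives a feedback value from its queue depth, and the traffic source reduces its rate in proportion); the function names, the weight `w`, the gain `gd`, and the 50% cap are assumptions for illustration, not values taken from the standard.

```python
def congestion_feedback(queue_len: float, prev_queue_len: float,
                        q_eq: float, w: float = 2.0) -> float:
    """Feedback computed at the congested switch.

    Negative values mean the queue is above its target (q_eq) and/or growing.
    w weights the queue growth term; its value here is an assumption.
    """
    q_off = queue_len - q_eq               # how far the queue is from its target
    q_delta = queue_len - prev_queue_len   # how fast the queue is growing
    return -(q_off + w * q_delta)


def react(rate_gbps: float, feedback: float, gd: float = 1 / 128) -> float:
    """Rate limiter at the traffic source: cut the rate in proportion to feedback.

    The decrease is capped at 50% per message (an assumed cap).
    """
    if feedback >= 0:
        return rate_gbps  # no congestion reported, keep the current rate
    decrease = min(gd * abs(feedback), 0.5)
    return rate_gbps * (1 - decrease)


fb = congestion_feedback(queue_len=30, prev_queue_len=20, q_eq=26)
print(fb)               # -24.0
print(react(10.0, fb))  # 8.125
```

The point of the sketch is that the reaction happens at the source of the traffic rather than only at the congested switch, which is what makes the scheme end to end.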

Enhanced Transmission Selection

- Existing QoS scheduling algorithms are fixed in nature: once strict priority mode is configured with a specified bandwidth for a class, the scheduler always tries to service that class, even when it has no traffic.

- When the offered load in a traffic class does not use its allocated bandwidth, Enhanced Transmission Selection allows other traffic classes to use that bandwidth.

- Use of a consolidated network brings operational and equipment cost benefits.

- This feature allows uniform management of bandwidth between classes.
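The bandwidth-sharing behavior described above can be sketched as a simple allocation routine. This is an illustrative model, not the scheduler defined by the standard: the function name, the class names, and the percentages are hypothetical. Each class first receives up to its configured share, and whatever it leaves unused is redistributed to classes that still have demand, in proportion to their shares.

```python
def ets_allocate(link_gbps: float, shares: dict[str, float],
                 offered: dict[str, float]) -> dict[str, float]:
    """Split link_gbps among classes by share, lending out unused bandwidth."""
    alloc = {c: 0.0 for c in shares}
    active = {c for c in shares if offered[c] > 0}
    remaining = link_gbps
    while active and remaining > 1e-9:
        total_share = sum(shares[c] for c in active)
        satisfied = set()
        granted = 0.0
        for c in active:
            fair = remaining * shares[c] / total_share  # this round's entitlement
            grant = min(fair, offered[c] - alloc[c])    # never exceed the demand
            alloc[c] += grant
            granted += grant
            if alloc[c] >= offered[c] - 1e-9:
                satisfied.add(c)
        remaining -= granted
        if not satisfied:
            break  # every active class took its full entitlement
        active -= satisfied
    return alloc


# Hypothetical 10 Gbps link with three classes configured at 50/30/20 percent.
shares = {"lan": 50, "san": 30, "ipc": 20}
print(ets_allocate(10.0, shares, {"lan": 8.0, "san": 1.0, "ipc": 1.0}))
# {'lan': 8.0, 'san': 1.0, 'ipc': 1.0}
```

In the example, the "lan" class is configured for 5 Gbps but receives 8 Gbps because the other two classes leave most of their allocation unused.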

Shortest Path Bridging

- The current spanning-tree-based forwarding model cannot meet the data center need for sub-second convergence.

- IEEE is working on the Shortest Path Bridging (SPB) concept, in which IS-IS and MAC learning work together.

- The IS-IS link-state database is used to build the unicast and multicast FDB tables.

- TRILL is an equivalent feature to SPB and is also used to reduce convergence time in the data center network.
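How a link-state database can be turned into forwarding entries can be sketched with a plain shortest-path computation. This is a minimal illustration using Dijkstra's algorithm, not the SPB or TRILL procedure itself (both add tie-breaking and multicast handling that are omitted here); the function name, the bridge names, and the link costs are hypothetical.

```python
import heapq


def next_hops(lsdb: dict[str, dict[str, int]], source: str) -> dict[str, str]:
    """Map each reachable bridge to the neighbour used to reach it from source."""
    table: dict[str, str] = {}
    visited: set[str] = set()
    heap = [(0, source, source)]  # (path cost, bridge, first hop on the path)
    while heap:
        cost, node, first = heapq.heappop(heap)
        if node in visited:
            continue  # already settled via a path that is no longer
        visited.add(node)
        if node != source:
            table[node] = first
        for nbr, link_cost in lsdb.get(node, {}).items():
            if nbr not in visited:
                # Paths leaving the source start at that neighbour.
                hop = nbr if node == source else first
                heapq.heappush(heap, (cost + link_cost, nbr, hop))
    return table


# Hypothetical four-bridge topology with symmetric link costs.
lsdb = {
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "C": 1, "D": 5},
    "C": {"A": 4, "B": 1, "D": 1},
    "D": {"B": 5, "C": 1},
}
print(next_hops(lsdb, "A"))  # {'B': 'B', 'C': 'B', 'D': 'B'}
```

Because every bridge holds the same link-state database, each can recompute its table locally after a failure, without waiting for a spanning tree to reconverge.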

Fibre Channel over Ethernet

- Intended to increase the ability of Ethernet to serve as a unified switching fabric.

- Requires the lossless Ethernet feature.

- FCoE and FIP (FCoE Initialization Protocol) are the two protocols driving the unified fabric concept.

- FIP is the control plane protocol. It is used to discover the FCoE entities connected to an Ethernet network and to establish and maintain virtual links for FCoE.

Technology Mapper

| Technology | IEEE/IETF Tracker | Solution Type |
|---|---|---|
| 10GBASE-T | 802.3an | Hardware |
| Next Gen NIC | NA | Hardware/Software |
| Low Latency Ethernet Switching | 802.1 | ASIC (L2 Engine) |
| 40G and 100G | 802.3ba | Hardware |
| Energy Efficient Ethernet | P802.3az | Power Controller Firmware |
| Class Based Pause Frames | P802.1Qbb | ASIC (Buffer Management) |
| Congestion Notification | P802.1Qau | ASIC (Buffer Management) |
| Enhanced Transmission Selection | P802.1Qaz | ASIC (Buffer Management) |
| Shortest Path Bridging/TRILL | 802.1aq (SPB) / IETF (TRILL) | Software |
| Fibre Channel over Ethernet | T11 Working Group | Software/ASIC |
| Data Center Bridging Capability Exchange Protocol | 802.1Qaz | Software |

For more about Data Center Bridging

- Overview of Data Center Bridging : www.docs.microsoft.com/en-us/windows…/overview-of-data-center-bridging
