
Usability testing

Usability testing is a technique used to evaluate a product by testing it with representative users. In the test, these users try to complete typical tasks while observers watch, listen, and take notes.

The goal of usability testing is to identify usability problems, collect quantitative data on participants’ performance (e.g., time on task, error rates), and determine participants’ satisfaction with the product.

Usability testing – 4 key things

Testing the Site NOT the Users

- We try hard to ensure that participants do not think that we are testing them. We help them understand that they are helping us test the prototype or Web site.

Performance vs. Subjective Measures

- We measure both performance and subjective (preference) metrics. Performance measures include success, time, errors, etc. Subjective measures include users’ self-reported satisfaction and comfort ratings.

- People’s performance and preference do not always match. Users sometimes perform poorly but give very high subjective ratings; conversely, they may perform well but give very low subjective ratings.

Make Use of What You Learn

- Usability testing is not just a milestone to be checked off on the project schedule. The team must consider the findings, set priorities, and change the prototype or site based on what happened in the usability test.

Find the Best Solution

- Most projects, including designing or revising Web sites, have to deal with constraints of time, budget, and resources. Balancing these constraints is one of the major challenges of most projects.

Usability testing – Stages

- Develop Test Plan

- Create Test Task Scenarios

- Test Setup

- Conduct Usability Test

- Test Metrics

- Data Analysis & Reporting

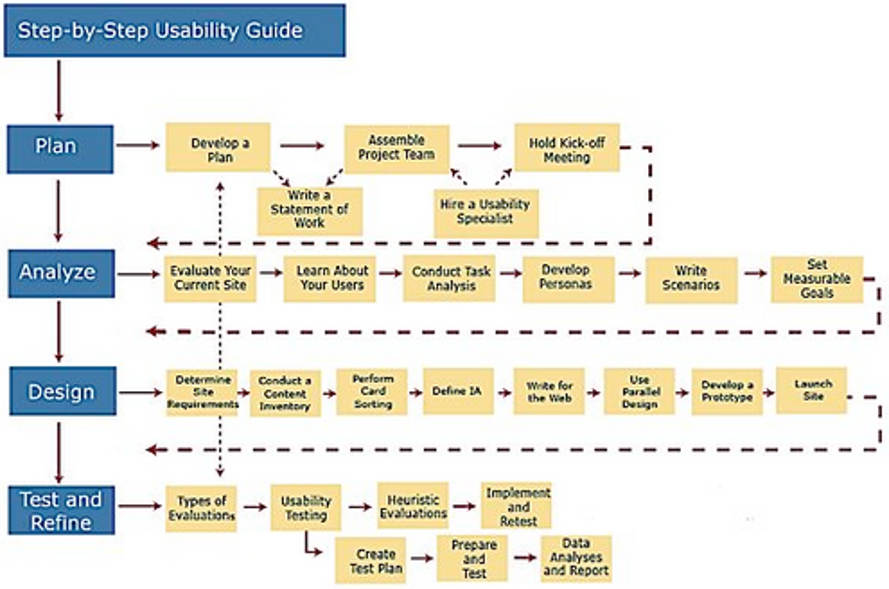

Usability testing – Step by step guide

Develop a test plan

- The first step in each round of usability testing is to develop a plan for the test. The purpose of the plan is to document what you are going to do: how you will conduct the test, which metrics you will capture, how many participants you will test, and which scenarios you will use.

- Typically, the usability specialist meets with the rest of the team to decide on the major elements of the plan. Often, the usability specialist then drafts the plan, which circulates to management and the rest of the team. Once everyone has commented and a final plan is negotiated, the usability specialist revises the written plan to reflect the final decisions.

Plan Elements

Scope

Indicate what you are testing: Give the name of the Web site, Web application, or other product. Also specify how much of the product the test will cover (e.g. the prototype as of a specific date; the navigation; navigation and content).

Purpose

Identify the concerns, questions, and goals for this test. These can be quite broad; for example, “Can users navigate to important information from the prototype’s home page?” They can be quite specific; for example, “Will users easily find the search box in its present location?”

In each round of testing, you will probably have several general and several specific concerns to focus on. Your concerns should drive the scenarios you choose for the usability test.

Schedule & Location

Indicate when and where you will do the test. You may want to be specific about the schedule. For example, you may want to indicate how many sessions you will hold in a day and exactly what times the sessions will be. (You will need that information to recruit participants, so you must decide the schedule early on.)

Sessions

Describe the sessions and their length (typically 60 to 90 minutes). When scheduling participants, leave yourself a little time between sessions to reset the environment and briefly review the session with observers.

Equipment

Indicate the type of equipment you will use in the test. Include information about the computer system, monitor size and resolution, operating system, browser, etc. Also indicate whether you plan to video- or audio-record the test sessions or use any special usability testing tools.

Participants

Indicate the number and types of participants you will recruit, and describe how you will recruit them.

Scenarios

Indicate the number and types of tasks included in testing. Typically you will end up with approximately 10 scenarios. You may want to include more in the test plan so the team can choose the appropriate tasks.

Metrics

Subjective metrics: Include the questions you will ask participants before the session (e.g., a background questionnaire), after each task scenario (satisfaction questions about how well the interface supported the task), and at the end of the session (overall satisfaction questions).

Quantitative metrics: Indicate the quantitative data you will be measuring in your test (e.g., successful completion rates, error rates, time on task).

Roles

Include a list of the staff who will participate in the usability testing and what role each will have. The usability specialist should be the facilitator of the sessions. The usability team may also provide the primary note-taker. Other team members should be expected to participate as observers and, perhaps, as note-takers.
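Because the usability specialist drafts the plan and circulates it for comments, it can help to keep the elements above in one structured record so that a missing element is obvious before the plan is finalized. The sketch below is one way to do that in Python; the `TestPlan` class, its field names, and the `missing_elements` helper are hypothetical and not part of any standard template.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TestPlan:
    """Hypothetical structured record of the test plan elements."""
    scope: str                      # product and how much of it is tested
    purpose: list[str]              # concerns, questions, and goals
    schedule: list[str]             # session dates and times
    location: str
    session_minutes: int            # typically 60 to 90
    equipment: list[str]
    participants: int               # number to recruit
    recruitment: str                # how participants are recruited
    scenarios: list[str]
    subjective_metrics: list[str]
    quantitative_metrics: list[str]
    roles: dict[str, str] = field(default_factory=dict)  # staff member -> role

    def missing_elements(self) -> list[str]:
        """Names of plan elements that are still empty (or zero)."""
        return [name for name, value in vars(self).items()
                if value in ("", [], {}, 0, None)]


plan = TestPlan(
    scope="Prototype as of the current build: navigation only",
    purpose=["Can users navigate to important information from the home page?"],
    schedule=[],
    location="Usability lab",
    session_minutes=60,
    equipment=["Laptop", "Screen recorder"],
    participants=8,
    recruitment="Existing customer panel",
    scenarios=["Find the search box"],
    subjective_metrics=["Overall satisfaction rating"],
    quantitative_metrics=["Success rate", "Time on task"],
)
print(plan.missing_elements())  # ['schedule', 'roles']
```

Here the check flags that no session times or staff roles have been filled in yet, which the team would need to settle before recruiting.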

Creating Test Task Scenarios

- To create good task scenarios, you will have to identify the top tasks that users try to complete when visiting your site. You may also have to identify scenarios or test items that you think may be usability issues to see if they really are. Good scenarios state a goal: what the user is to do, what question they are trying to answer, or what data they need to complete the task.

- Keep the scenarios short so that participants don’t need to read a lot and scenarios are easy to understand. Do not include information about how to complete the task. You will need to create about ten scenarios for a typical one-hour test.

- Record Completion Paths

- You will need to record the task scenario completion paths. Having the completion paths will help the observers and note-takers know what to expect and how to complete the task. Participants should not see the paths.

- Pilot Test

- Always try out your scenarios in a pilot test. If you find that your ‘trial’ participants do not understand a scenario, rewrite it. After you rewrite the scenario, run a second pilot test.

Test Setup

Make sure you have everything prepared and checked before the test sessions. If you have any concerns, do a dry run to check the equipment and materials, or run a pilot test with a volunteer participant.

The pilot test allows you to:

- test the equipment and give the facilitator and note-takers practice

- get a good sense of whether your questions and scenarios are clear to participants

Run the pilot test a few days prior to the first test session so that you have time to change the scenarios or other materials if necessary.

Conduct usability test

- The facilitator will welcome the participant and invite the participant to sit in front of the computer where they will be working. The facilitator explains the test session, asks the participant to sign the video release form, and asks the profile (demographic) questions. The facilitator explains thinking aloud and asks if the participant has any additional questions. The facilitator explains where to start.

- The participant reads the task scenario and begins working on it while thinking aloud. The note-takers record the participant’s behaviors, comments, errors, and completion (success or failure).

- The session continues until all task scenarios are completed or the allotted time has elapsed. The facilitator asks the end-of-session subjective questions, thanks the participant, gives the participant the agreed-on incentive, and escorts them from the testing environment.

Test Metrics

You will want to collect several metrics, all of which you should have identified in the usability test plan.

Successful Task Completion

Each scenario requires the participant to obtain specific data that would be used in a typical task. The scenario is successfully completed when the participant indicates they have found the answer or completed the task goal.

In some cases, you may want to give participants multiple-choice questions. Remember to include the questions and answers in the test plan and provide them to note-takers and observers.
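When a scenario uses multiple-choice questions, success can be scored mechanically against the answer key kept in the test plan. This is a minimal sketch assuming a hypothetical `ANSWER_KEY` that maps scenario IDs to the correct choice; a question the participant skipped counts as a failure.

```python
from __future__ import annotations

# Hypothetical answer key from the test plan: scenario id -> correct choice.
ANSWER_KEY = {"S1": "B", "S2": "D", "S3": "A"}


def score_answers(answers: dict[str, str | None], key: dict[str, str]) -> dict[str, bool]:
    """Mark each scenario as succeeded (True) or failed (False).

    A scenario succeeds only if the participant gave an answer and it
    matches the key; skipped scenarios (None or absent) are failures.
    """
    return {sid: answers.get(sid) == correct for sid, correct in key.items()}


participant_1 = {"S1": "B", "S2": "C", "S3": None}
print(score_answers(participant_1, ANSWER_KEY))  # {'S1': True, 'S2': False, 'S3': False}
```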

Critical & Non-Critical Errors

Critical errors are deviations at completion from the targets of the scenario, for example, reporting the wrong data value because of the path the participant took. Essentially, the participant cannot complete the task successfully. The participant may or may not be aware that the task goal is incorrect or incomplete.

Non-critical errors are errors that the participant recovers from and that do not prevent the participant from successfully completing the task. These errors make the task less efficient to complete. For example, exploratory behavior such as opening the wrong navigation menu item or using a control incorrectly counts as a non-critical error.

Error-Free Rate

Error-free rate is the percentage of test participants who complete the task without any errors (critical or non-critical errors).
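The error-free rate follows directly from the note-takers' error counts. This sketch assumes one hypothetical `TaskAttempt` record per participant for a single task, holding that participant's critical and non-critical error counts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TaskAttempt:
    participant: str
    critical_errors: int = 0
    non_critical_errors: int = 0


def error_free_rate(attempts: list[TaskAttempt]) -> float:
    """Percentage of participants with no critical and no non-critical errors."""
    if not attempts:
        raise ValueError("no attempts recorded for this task")
    error_free = sum(
        1 for a in attempts if a.critical_errors == 0 and a.non_critical_errors == 0
    )
    return 100.0 * error_free / len(attempts)


attempts = [
    TaskAttempt("P1"),
    TaskAttempt("P2", non_critical_errors=2),  # recovered, but still not error-free
    TaskAttempt("P3", critical_errors=1),
    TaskAttempt("P4"),
]
print(error_free_rate(attempts))  # 50.0
```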

Time On Task

The amount of time it takes the participant to complete the task.
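Time on task is usually summarized per scenario. Following the reporting guidance to average time only for participants who completed the scenario, this sketch drops failed attempts first. The `(seconds, completed)` tuples are hypothetical data, and reporting the median next to the mean is an added choice: a single slow session can pull the mean up noticeably.

```python
from __future__ import annotations

from statistics import mean, median


def time_on_task(results: list[tuple[float, bool]]) -> dict[str, float]:
    """Summarize time on task (seconds) for participants who completed the task."""
    times = [seconds for seconds, completed in results if completed]
    if not times:
        raise ValueError("no participant completed this task")
    return {"n": len(times), "mean_s": mean(times), "median_s": median(times)}


# Hypothetical results for one scenario: (seconds on task, completed?)
results = [(95, True), (140, True), (210, False), (120, True), (300, False)]
summary = time_on_task(results)
print(summary["n"], round(summary["mean_s"], 1), summary["median_s"])  # 3 118.3 120
```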

Subjective Measures

These are participants’ self-reported ratings of satisfaction, ease of use, ease of finding information, etc., typically given on a 5- or 7-point Likert scale.
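A minimal sketch for summarizing one Likert item, assuming ratings are coded as integers from 1 to the number of scale points (5 by default). It reports the full distribution alongside the mean, because a mean alone can hide a split between very high and very low ratings.

```python
from __future__ import annotations

from collections import Counter
from statistics import mean


def summarize_likert(ratings: list[int], points: int = 5) -> dict:
    """Mean and full distribution for one Likert item coded 1..points."""
    if not ratings:
        raise ValueError("no ratings recorded")
    if any(not 1 <= r <= points for r in ratings):
        raise ValueError(f"ratings must be integers from 1 to {points}")
    counts = Counter(ratings)
    return {
        "mean": mean(ratings),
        # Include zero counts so every scale point appears in the report.
        "distribution": {value: counts[value] for value in range(1, points + 1)},
    }


# Hypothetical "ease of use" ratings from five participants on a 5-point scale.
print(summarize_likert([4, 5, 3, 4, 2]))
# {'mean': 3.6, 'distribution': {1: 0, 2: 1, 3: 1, 4: 2, 5: 1}}
```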

Likes, Dislikes and Recommendations

Participants say what they liked most about the site, what they liked least, and what they recommend to improve it.

Data Analysis

At the end of the usability test you will have several types of data (depending on the metrics you collected). You should have quantitative data: success rates, task time, error rates, and satisfaction questionnaire ratings. Your qualitative data might include: observations about pathways participants took, problems experienced, comments, and answers to open-ended questions.

Quantitative Data

- Enter the data in a spreadsheet to perform calculations, such as:

- percentage of participants who succeeded or failed at each task

- average time to complete tasks

- frequency of specific problems

You may want to add participants’ demographic data so that you can sort by demographics and see whether any of the data differ by demographic variables. Make sure you identify the task scenario for each metric.
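The spreadsheet calculations above can also be scripted. This sketch assumes hypothetical rows with one entry per participant per task (`participant`, `group`, `task`, `success`, `seconds`), where `group` stands in for whatever demographic variable you collected. Grouping by `task` gives per-task success rates and mean completion times for successful attempts; adding `group` to the grouping key breaks the same numbers down by demographic.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean

# Hypothetical spreadsheet rows: one per participant per task.
rows = [
    {"participant": "P1", "group": "novice", "task": "T1", "success": True, "seconds": 80},
    {"participant": "P1", "group": "novice", "task": "T2", "success": False, "seconds": 240},
    {"participant": "P2", "group": "expert", "task": "T1", "success": True, "seconds": 45},
    {"participant": "P2", "group": "expert", "task": "T2", "success": True, "seconds": 130},
    {"participant": "P3", "group": "novice", "task": "T1", "success": False, "seconds": 200},
    {"participant": "P3", "group": "novice", "task": "T2", "success": True, "seconds": 150},
]


def summarize(rows: list[dict], by: tuple[str, ...] = ("task",)) -> dict[tuple, dict]:
    """Success rate (%) and mean time of successful attempts, grouped by the given columns."""
    groups: dict[tuple, list[dict]] = defaultdict(list)
    for row in rows:
        groups[tuple(row[col] for col in by)].append(row)
    summary = {}
    for key in sorted(groups):
        members = groups[key]
        success_times = [r["seconds"] for r in members if r["success"]]
        summary[key] = {
            "n": len(members),
            "success_pct": 100.0 * len(success_times) / len(members),
            # NaN when nobody in the group succeeded, so there is no time to average.
            "mean_time_s": mean(success_times) if success_times else float("nan"),
        }
    return summary


for key, stats in summarize(rows).items():
    print(key, f"{stats['success_pct']:.0f}% succeeded, mean time {stats['mean_time_s']} s")
for key, stats in summarize(rows, by=("task", "group")).items():
    print(key, f"{stats['success_pct']:.0f}% succeeded")
```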

Qualitative Data

Read through the notes carefully, looking for patterns. Add a description of each problem. Look for trends and keep a count of the problems that occurred across participants. Make sure your problem statements are exact and concise. For example:

Good problem statement: Clicked on link to Research instead of Clinical Trials.

Poor problem statement: Clicked on wrong link.

Poor problem statement: Was confused about links.
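Keeping a count of problems across participants amounts to tallying coded problem statements. This sketch assumes the notes have already been coded as hypothetical `(participant, task, problem)` tuples and counts distinct participants per problem, so one participant hitting the same problem twice counts once. This is also where exact statements pay off: the tally only works if the same problem is worded the same way each time.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical coded notes: (participant, task, problem statement).
notes = [
    ("P1", "T3", "Clicked on link to Research instead of Clinical Trials"),
    ("P2", "T3", "Clicked on link to Research instead of Clinical Trials"),
    ("P2", "T3", "Clicked on link to Research instead of Clinical Trials"),  # same participant again
    ("P3", "T1", "Did not notice the search box"),
]


def problem_frequency(notes: list[tuple[str, str, str]]) -> list[tuple[str, int]]:
    """Count distinct participants per problem, most frequent first."""
    participants: dict[str, set[str]] = defaultdict(set)
    for participant, _task, problem in notes:
        participants[problem].add(participant)
    counts = [(problem, len(who)) for problem, who in participants.items()]
    # Sort by count (descending), then alphabetically for a stable report order.
    return sorted(counts, key=lambda item: (-item[1], item[0]))


for problem, count in problem_frequency(notes):
    print(f"{count} participant(s): {problem}")
```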

Reporting

A good report should present just enough detail that the method can be repeated in subsequent tests. Keep the sections short and use plenty of tables to display the metrics. Focus on the findings and recommendations, and use visual examples to illustrate problem areas.

Background Summary

- Include a brief summary including what you tested (Web site or web application), where and when the test was held, equipment information, what you did during the test (include all testing materials as an appendix), the testing team, and a brief description of the problems.

Methodology

- Include the test methodology so that others can recreate the test. Explain how you conducted the test by describing the test sessions, the type of interface tested, metrics collected, and an overview of task scenarios.

- Describe the participants and provide summary tables of the background/demographic questionnaire responses (e.g., age, professions, internet usage, sites visited, etc.). Provide brief summaries of the demographic data.

Test Results

- Describe what the facilitator and data loggers recorded. Depending on the metrics you collected you may want to show:

- the number and percent of participants who completed each scenario, and all scenarios (a bar chart often works well for this)

- the average time taken to complete each scenario for those who completed the scenario

- the satisfaction results

- Describe the tasks that had the highest and lowest completion rates. Provide a summary of the successful task completion rates by participant, task, and average success rate by task and show the data in a table. Follow the same model for all metrics.

Findings and Recommendations

- List your findings and recommendations using all your data (quantitative and qualitative, notes and spreadsheets). Each finding should have a basis in data—in what you actually saw and heard.

- You may want to have just one overall list of findings and recommendations, findings and recommendations scenario by scenario, or both: a list of major findings and recommendations that cut across scenarios as well as a scenario-by-scenario report.

Report Positive Findings

- Although most usability test reports focus on problems, it is also useful to report positive findings. What is working well must be maintained through further development. An entirely negative report can be disheartening; it helps the team to know when there is a lot about the Web site that is going well.

Link Findings and Recommendations

- Each finding should include as specific a statement of the situation as possible. Each finding (or group of related findings) should include recommendations on what to do.

Provide a Severity Rating

- If your analysis classified problems as local or global and assigned each a severity level, report those ratings.
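If your analysis assigned severity levels and a local/global scope, ordering the findings by those fields puts the most serious problems at the top of the report. The four-level scale, the `Finding` fields, and the tie-break that lists global problems before local ones are all assumptions for illustration; substitute whatever scheme your team used.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical four-level scale; higher numbers are more severe.
SEVERITY_LABELS = {1: "cosmetic", 2: "minor", 3: "major", 4: "critical"}


@dataclass
class Finding:
    statement: str
    severity: int        # key into SEVERITY_LABELS
    scope: str           # "global" (affects the whole site) or "local" (one page or task)
    recommendation: str


def report_order(findings: list[Finding]) -> list[Finding]:
    """Most severe first; at equal severity, global problems before local ones."""
    return sorted(findings, key=lambda f: (-f.severity, f.scope != "global"))


findings = [
    Finding("Search box overlooked on the home page", 2, "local", "Make the search box more prominent"),
    Finding("Clicked on link to Research instead of Clinical Trials", 3, "local", "Relabel the two links"),
    Finding("Navigation labels misread on most pages", 3, "global", "Revise and retest the labels"),
]
for f in report_order(findings):
    print(f"[{SEVERITY_LABELS[f.severity]}, {f.scope}] {f.statement} -> {f.recommendation}")
```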

Goals of Usability testing

Usability testing is a black-box testing technique. The aim is to observe people using the product to discover errors and areas of improvement. Usability testing generally involves measuring how well test subjects respond in four areas: efficiency, accuracy, recall, and emotional response. The results of the first test can be treated as a baseline or control measurement; all subsequent tests can then be compared to the baseline to indicate improvement.

- Efficiency: How much time, and how many steps, are required for people to complete basic tasks? (For example, find something to buy, create a new account, and order the item.)

- Accuracy: How many mistakes did people make? (And were they fatal or recoverable with the right information?)

- Recall: How much does the person remember afterwards or after periods of non-use?

- Emotional response: How does the person feel about the tasks completed? Is the person confident, stressed? Would the user recommend this system to a friend?

To assess the usability of the system under test, quantitative and/or qualitative usability goals (also called usability requirements) must be defined beforehand. If the results of the usability testing meet those goals, the system can be considered usable for the end users whose representatives tested it.
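Checking a round of results against predefined quantitative usability goals, and against the first round treated as a baseline, comes down to a few comparisons. The thresholds in `GOALS` and the sample numbers below are hypothetical; each goal records whether the metric must be at least (`"min"`) or at most (`"max"`) the threshold.

```python
from __future__ import annotations

# Hypothetical goals agreed on before testing: metric -> (threshold, direction).
GOALS = {
    "success_pct": (80.0, "min"),   # at least 80% of participants complete the task
    "mean_time_s": (120.0, "max"),  # mean time on task of at most 2 minutes
    "satisfaction": (4.0, "min"),   # mean rating of at least 4 on a 5-point scale
}


def check_goals(results: dict[str, float],
                goals: dict[str, tuple[float, str]] = GOALS) -> dict[str, bool]:
    """Whether each goal is met by this round's results."""
    met = {}
    for metric, (threshold, direction) in goals.items():
        value = results[metric]
        met[metric] = value >= threshold if direction == "min" else value <= threshold
    return met


def change_from_baseline(results: dict[str, float],
                         baseline: dict[str, float]) -> dict[str, float]:
    """Per-metric difference from the baseline round (for time, negative is better)."""
    return {metric: results[metric] - baseline[metric] for metric in baseline}


baseline = {"success_pct": 62.5, "mean_time_s": 150.0, "satisfaction": 3.4}
round_2 = {"success_pct": 87.5, "mean_time_s": 110.0, "satisfaction": 4.1}
print(check_goals(round_2))  # {'success_pct': True, 'mean_time_s': True, 'satisfaction': True}
print(change_from_baseline(round_2, baseline))
```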